Reinforcement learning (RL) is about learning through interaction: an agent takes actions in an environment and improves its behaviour using feedback in the form of rewards. Many RL methods face a basic trade-off: value-based approaches can learn stable value estimates but become awkward when the action space is large or continuous, while pure policy-based approaches optimise the policy directly but produce noisy, high-variance updates. Actor-critic methods are designed to balance both. They train two models at the same time: an Actor that chooses actions and a Critic that evaluates how good those actions are. If you are exploring modern RL as part of a data scientist course in Chennai, actor-critic is a key architecture to understand because it underpins many widely used algorithms.

The Core Idea: Actor and Critic, Two Complementary Roles

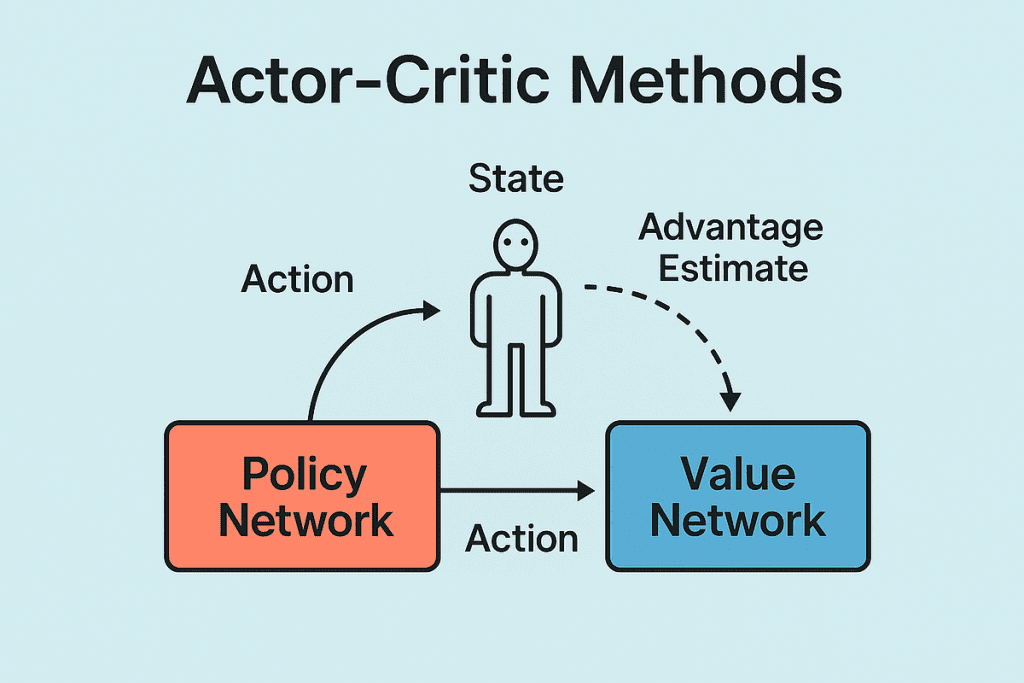

In actor-critic methods, the Actor represents the policy. It outputs what action to take given the current state. The Actor can be deterministic (directly outputs an action) or stochastic (outputs a probability distribution over actions).
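To make the two kinds of Actor concrete, here is a minimal sketch for a discrete action space. It assumes the Actor produces one score (a "logit") per action; the function names and the example scores are hypothetical and chosen only for illustration. The deterministic version here simply picks the highest-scoring action, which is one simple way to get a deterministic policy over discrete actions.

```python
import math
import random

def softmax(logits):
    """Turn arbitrary scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def stochastic_actor(logits, rng):
    """Sample an action index according to the policy's probabilities."""
    probs = softmax(logits)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

def deterministic_actor(logits):
    """Always return the highest-scoring action."""
    return max(range(len(logits)), key=lambda a: logits[a])

# Hypothetical scores for three actions in a single state.
logits = [2.0, 0.5, -1.0]
rng = random.Random(0)

probs = softmax(logits)
samples = [stochastic_actor(logits, rng) for _ in range(1000)]
greedy = deterministic_actor(logits)
```

The stochastic Actor still tries the lower-scoring actions occasionally, which is what gives it built-in exploration; the deterministic Actor needs exploration to be added from outside, for example as noise on its output.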

The Critic estimates how good the current situation is. Typically, it learns a value function such as:

- V(s): the expected return from state s, or

- Q(s, a): the expected return from taking action a in state s.

The Critic acts like a coach. It does not directly choose actions. Instead, it tells the Actor whether its choices were better or worse than expected. This feedback helps the Actor update its policy in a more informed way than using raw rewards alone.

Why Actor-Critic Works Better Than Pure Policy Gradients

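Pure policy gradient methods update the policy by increasing the probability of actions that were followed by higher returns. This can work, but the gradient estimates often have high variance, because the return from a single trajectory depends on every random action and transition that came after the action being judged. High variance makes training slow and unstable, especially in complex environments.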

Actor-critic reduces this problem by using the Critic as a baseline. Instead of asking “Was the return high?”, the Actor asks a sharper question: “Was the outcome better than what the Critic expected?” This is captured by a quantity called the advantage:

A(s, a) = Q(s, a) − V(s)

If the advantage is positive, the action was better than expected, so the Actor should become more likely to repeat it. If the advantage is negative, the Actor should avoid it in the future.
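In practice the true Q(s, a) and V(s) are not known, so the advantage has to be estimated. One common estimate is the one-step temporal-difference (TD) error: the observed reward plus the discounted value of the next state, minus the Critic's value for the current state. The sketch below assumes a discount factor `gamma` and a `done` flag for episode ends; neither appears in the text above, and the numbers are made up for illustration.

```python
def td_advantage(reward, value_s, value_next, gamma=0.99, done=False):
    """One-step estimate of A(s, a): r + gamma * V(s') - V(s).

    When the episode has ended there is no next state to bootstrap from,
    so the future value is treated as zero.
    """
    target = reward + (0.0 if done else gamma * value_next)
    return target - value_s

# The Critic expected 0.5 from this state, but the action earned a reward
# of 1.0 and led to a state the Critic values at 1.0.
better_than_expected = td_advantage(1.0, 0.5, 1.0, gamma=0.9)

# The Critic expected 2.0, but the action earned nothing and ended the episode.
worse_than_expected = td_advantage(0.0, 2.0, 0.0, gamma=0.9, done=True)
```

The first value is positive, so the Actor would be nudged towards that action; the second is negative, so the Actor would be nudged away from it.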

This combination—policy learning plus value-based feedback—is one reason actor-critic methods are common in advanced RL modules in a data scientist course in Chennai.

How the Learning Loop Typically Works

Even though implementations vary, many actor-critic systems follow the same loop:

- Collect experience: run the current policy to gather trajectories (states, actions, rewards).

- Update the Critic: train the value function to better predict returns, for example by regressing towards observed returns or bootstrapped targets.

- Compute advantage estimates: use the Critic to measure how good each action was relative to expectation.

- Update the Actor: adjust policy parameters to favour actions with positive advantage and reduce those with negative advantage.

- Repeat until performance stabilises.
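The loop above can be sketched end to end on a toy problem. Everything specific here is an assumption made for illustration: a hypothetical five-state corridor where the agent starts at the left end and earns a reward of 1 for reaching the right end, a tabular softmax Actor, a tabular Critic, one-step TD targets, and hand-picked learning rates. Real systems usually replace the tables with neural networks.

```python
import math
import random

N_STATES, GOAL, GAMMA = 5, 4, 0.9

def step(state, action):
    """Hypothetical corridor: action 1 moves right, action 0 moves left."""
    nxt = min(state + 1, GOAL) if action == 1 else max(state - 1, 0)
    done = nxt == GOAL
    return nxt, (1.0 if done else 0.0), done

def policy(theta, state):
    """Softmax over the Actor's action preferences for one state."""
    m = max(theta[state])
    exps = [math.exp(p - m) for p in theta[state]]
    total = sum(exps)
    return [e / total for e in exps]

def train(episodes=500, alpha_actor=0.1, alpha_critic=0.2, seed=0):
    rng = random.Random(seed)
    theta = [[0.0, 0.0] for _ in range(N_STATES)]  # Actor parameters
    values = [0.0] * N_STATES                      # Critic estimates of V(s)
    for _ in range(episodes):
        # 1. Collect experience with the current policy (capped at 100 steps).
        state, done, trajectory = 0, False, []
        while not done and len(trajectory) < 100:
            action = rng.choices([0, 1], weights=policy(theta, state))[0]
            nxt, reward, done = step(state, action)
            trajectory.append((state, action, reward, nxt, done))
            state = nxt
        # 2. Update the Critic towards one-step TD targets.
        for s, _, r, s2, d in trajectory:
            target = r + (0.0 if d else GAMMA * values[s2])
            values[s] += alpha_critic * (target - values[s])
        # 3 and 4. Compute advantage estimates, then update the Actor.
        for s, a, r, s2, d in trajectory:
            advantage = r + (0.0 if d else GAMMA * values[s2]) - values[s]
            probs = policy(theta, s)
            for b in (0, 1):
                # Gradient of log pi(a|s) with respect to preference b.
                grad_log = (1.0 if b == a else 0.0) - probs[b]
                theta[s][b] += alpha_actor * advantage * grad_log
    return theta, values

theta, values = train()
prob_right_at_start = policy(theta, 0)[1]
```

After training, `prob_right_at_start` should sit well above 0.5, and the Critic's values should rise towards the goal. The split into a Critic pass followed by an Actor pass mirrors the bullet order above; many implementations instead compute the advantages first and update both models from the same estimates.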

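A key detail is that the Critic must be reasonably accurate. If its value estimates are biased, the advantage estimates inherit that bias and can push the Actor towards worse actions. This is why many practical algorithms focus heavily on stabilising value learning, for example with the target networks used in DDPG and SAC.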

Popular Actor-Critic Variants You’ll See in Practice

Actor-critic is a family, not a single algorithm. Here are widely used variants and what they add:

- A2C (Advantage Actor-Critic): a synchronous version that uses advantage estimates to train the Actor.

- A3C (Asynchronous Advantage Actor-Critic): multiple workers run their own copies of the environment in parallel and update shared parameters asynchronously, which diversifies the experience collected and speeds up training.

- DDPG (Deep Deterministic Policy Gradient): designed for continuous action spaces with a deterministic Actor and a Critic that learns Q-values.

- SAC (Soft Actor-Critic): adds an entropy term so the policy stays exploratory; often strong and stable for continuous control.

- PPO (Proximal Policy Optimisation): commonly implemented as an actor-critic method, with a value-function Critic that supplies advantage estimates and a clipped policy update that keeps each step close to the previous policy for stability.

You do not need to memorise every detail at first. The important point is that many state-of-the-art RL systems are built on the actor-critic pattern.
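As one example of what a variant adds, PPO's clipped policy update can be written in a few lines. The sketch below computes the clipped surrogate objective for a single sample, where `ratio` is the probability of the action under the new policy divided by its probability under the old one. The default `epsilon=0.2` is a commonly used setting and is an assumption here, as are the example numbers.

```python
def ppo_clipped_objective(ratio, advantage, epsilon=0.2):
    """Clipped surrogate objective for one (state, action) sample.

    Taking the minimum of the clipped and unclipped terms removes the
    incentive to move the policy far from the old one in a single update.
    """
    clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    return min(ratio * advantage, clipped_ratio * advantage)

# Good action whose probability has already risen by 50%: the gain is capped.
capped_gain = ppo_clipped_objective(ratio=1.5, advantage=2.0)

# Bad action whose probability has already been halved: the term is capped too.
capped_loss = ppo_clipped_objective(ratio=0.5, advantage=-1.0)

# Bad action whose probability went up: no clipping, the full penalty applies.
full_penalty = ppo_clipped_objective(ratio=1.5, advantage=-1.0)
```

In a full implementation this quantity is averaged over a batch and maximised with respect to the Actor's parameters, alongside a separate loss for the Critic.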

Where Actor-Critic Shines, and Common Pitfalls

Actor-critic methods work well when:

- the action space is large or continuous,

- the environment is complex and requires learning a direct policy,

- you need better sample efficiency than simple policy gradients.

However, there are pitfalls:

- Unstable training if the Critic learns poorly or drifts.

- Bias-variance trade-offs in advantage estimation; techniques like Generalised Advantage Estimation (GAE) help.

- Exploration problems if the Actor becomes too confident too early.

- Reward design issues that can lead to unintended behaviour.
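The bias-variance trade-off mentioned above is easiest to see in code. Here is a minimal sketch of Generalised Advantage Estimation for a single trajectory. It assumes the trajectory contains no episode boundary in the middle, and that `last_value` is the Critic's estimate for the state after the final step (zero if the episode ended there). The parameter names and defaults are illustrative assumptions.

```python
def gae(rewards, values, last_value, gamma=0.99, lam=0.95):
    """Generalised Advantage Estimation over one trajectory.

    rewards[t] and values[t] belong to step t. The result is an
    exponentially weighted sum of one-step TD errors, computed backwards.
    """
    advantages = [0.0] * len(rewards)
    running = 0.0
    next_value = last_value
    for t in reversed(range(len(rewards))):
        delta = rewards[t] + gamma * next_value - values[t]  # one-step TD error
        running = delta + gamma * lam * running
        advantages[t] = running
        next_value = values[t]
    return advantages

rewards = [1.0, 1.0, 1.0]
values = [0.0, 0.0, 0.0]  # a Critic that predicts nothing, for illustration

# lam = 0 trusts the Critic fully: each advantage is just the one-step TD error.
low_variance = gae(rewards, values, last_value=0.0, gamma=1.0, lam=0.0)

# lam = 1 trusts the observed rewards fully: each advantage is return minus value.
low_bias = gae(rewards, values, last_value=0.0, gamma=1.0, lam=1.0)
```

Values of `lam` between 0 and 1 blend the two extremes, which is why it is a common tuning knob when the Critic is still inaccurate.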

Understanding these risks is part of becoming effective at RL engineering, and it is often addressed through practical experiments in a data scientist course in Chennai.

Conclusion

Actor-critic methods combine the best of two worlds: the Actor learns what to do, and the Critic learns how good those actions are. By using advantage-based feedback, actor-critic typically trains more stably than plain policy gradients while still learning flexible policies for complex tasks. From A2C and PPO to SAC and DDPG, the actor-critic architecture is central to modern reinforcement learning. If RL is on your learning roadmap—especially through a data scientist course in Chennai—mastering this pattern will help you understand how many real-world RL systems are designed and trained.